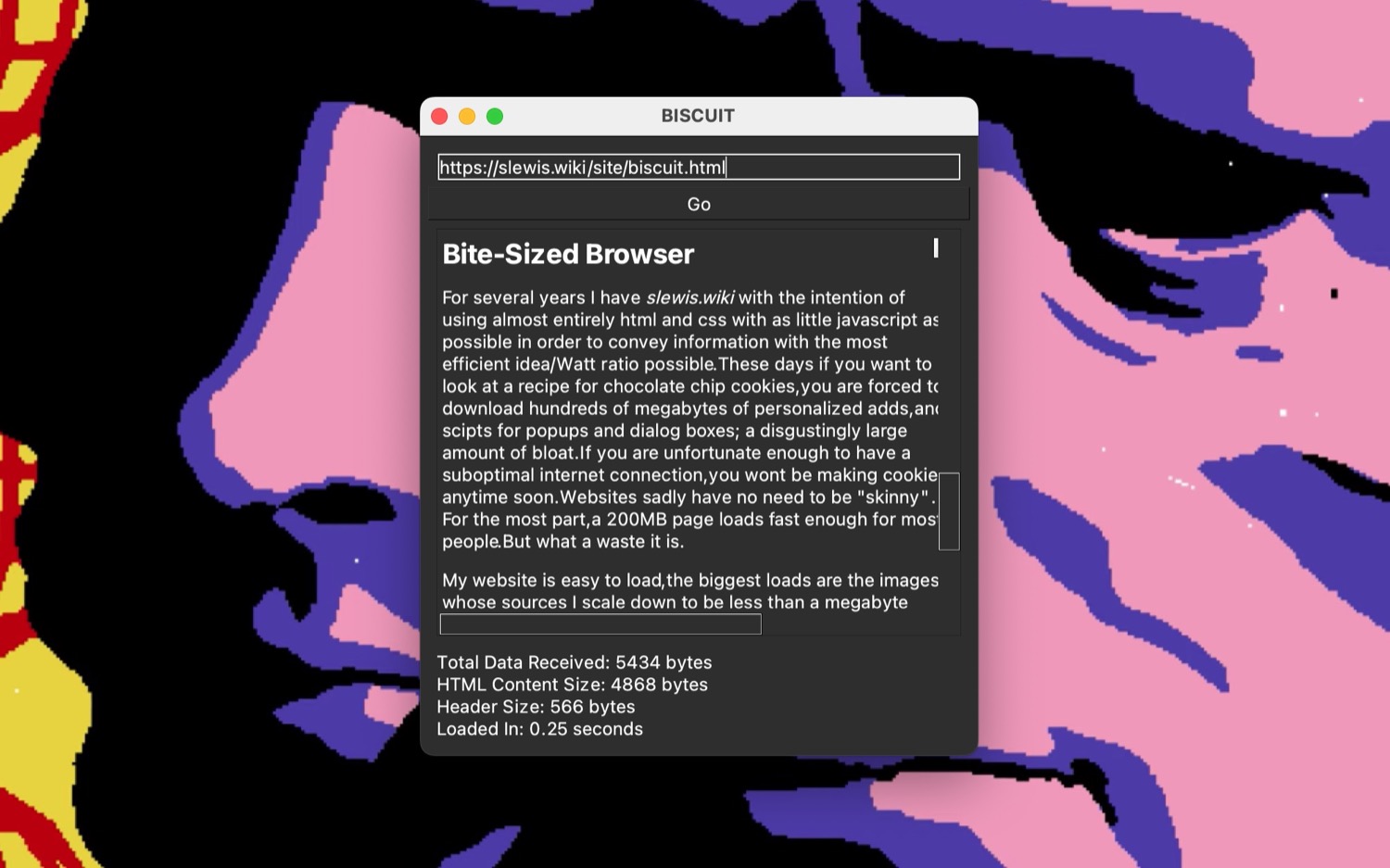

Bite-Sized Browser

BISCUIT is a bare-bones browser with a focus on minimizing user-side data downloads and processing.

For several years I have added to slewis.wiki with the intention of using almost entirely HTML and CSS, with as little JavaScript as possible, in order to convey information with the most efficient idea/Watt ratio possible. These days, if you want to look at a recipe for chocolate chip cookies, you are forced to download hundreds of megabytes of personalized ads and scripts for popups and dialog boxes: a disgustingly large amount of bloat. If you are unfortunate enough to have a suboptimal internet connection, you won't be making cookies anytime soon. Sadly, websites have no need to be "skinny." For the most part, a 200MB page loads fast enough for most people. But what a waste it is.

My website is easy to load. The biggest loads are the images, whose sources I scale down to less than a megabyte each at most; most are a few dozen kilobytes. Even so, you have to access my website through a browser app (most likely Chrome), which yet again forces you to consume mountains of data whether you ask it to or not. Somehow, a single page of my website that is 2kB of HTML and CSS text consumes 200MB of memory in Chrome. I don't like that.

BISCUIT is, in a way, a throwback to the old internet. There is no built-in search engine; you have to know the website address ahead of time. There's no JavaScript execution, no image rendering (currently), and much, much more (less?). I hope that in some strange way people will use BISCUIT as a reason to build simple blogs: places and pages for simple text, shareable quickly and cleanly in the most efficient way possible. Shockingly, some big-name news sites have lightweight text-only portals, like lite.cnn.com and text.npr.org, that are very compatible with BISCUIT and easy to navigate.

BISCUIT is in its very early stages, and there are several more things I want to add to it. If you like what you see, please feel free to fork it and contribute on GitHub.

A New Design Perspective

Building BISCUIT so far has given me an opportunity to reflect on how I engage in the creative process, especially when it comes to programming. It is very easy for me to become paralyzed by the prospect of venturing into the unknown, especially when the finish line I have in mind is mildly amorphous. Faced with the goal to "build a browser," my progress and inspiration could be immediately stunted by apprehension about my inability to come up with a complete project on the spot. I didn't know how to request and grab HTML data from a webpage, let alone how to display it on the screen. How was I going to handle every type of webpage format? All the edge cases for intrasite links?

To combat the whirlpool of fearful musings, I took a few pages from The Pragmatic Programmer by Andy Hunt and Dave Thomas. Start simple. Don't worry about building a browser all at once. Baby steps: find out how to make a window appear, and display some hard-coded text in it. Then expand: Google around to figure out how to make an HTTP request for a page's HTML and load (parse) it. Oh! Turns out the library I used to make the window appear can also detect what is clicked on. I can use that to activate hyperlinks, and I can reuse my page-load function to load the clicked link! It's all coming together.
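The post doesn't name the libraries BISCUIT uses for fetching and parsing, so the following is only a minimal sketch of the fetch-then-parse step using Python's standard library (`urllib.request` and `html.parser`). The `TextAndLinks` class and the `parse_page`/`fetch_page` helpers are hypothetical names. The sketch collects visible text while skipping script and style content, and resolves each `href` against the page URL so that a clicked link could be handed straight back to the same page-load function.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class TextAndLinks(HTMLParser):
    """Collect visible text and hyperlink targets from an HTML document."""

    # Tags whose contents are never displayed as page text.
    SKIP = {"script", "style", "head", "title"}

    def __init__(self, base_url=""):
        super().__init__()
        self.base_url = base_url
        self.text = []    # visible text fragments, in document order
        self.links = []   # absolute URLs taken from <a href="...">
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative (intrasite) links against the page URL.
                self.links.append(urljoin(self.base_url, href))

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped tag.
        if self._skip_depth == 0 and data.strip():
            self.text.append(data.strip())


def parse_page(html, base_url=""):
    """Return (text_fragments, absolute_links) for an HTML string."""
    parser = TextAndLinks(base_url)
    parser.feed(html)
    parser.close()
    return parser.text, parser.links


def fetch_page(url):
    """Download a page and parse it (requires network access)."""
    with urlopen(url, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    return parse_page(html, base_url=url)
```

Usage might look like `text, links = fetch_page("https://text.npr.org")`, after which clicking the n-th link would simply call `fetch_page(links[n])` again.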

One such "coming together" moment was when I decided to take advantage of the plethora of LLM APIs floating around the vast (mostly dead, imo) internet. This was an extremely fruitful endeavor, as it gave the whole project a whimsical flavor it was sorely lacking. By setting my active org/project keys in the config.yaml file (and making damn sure I don't push that to GitHub), I can engineer an LLM prompt with a specific task to operate on the current text in the BISCUIT window! I love the transformative capabilities this provides: for any page I access, I can instantly summarize, poetify, roast, or praise the text content. I am now imagining my friends publishing a new post to their text-based blogs and me instantly roasting them on it. Incredible. Now I just need to find some friends who know how to make a website like this one... One thing at a time. There's so much more I can do with access to AI models beyond my comprehension that I don't even know where to start. But perhaps this will do for the moment.
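The post doesn't show BISCUIT's actual prompts, so here is a minimal sketch of how a task-specific prompt could be assembled, assuming the common chat-style message format (a list of role/content dictionaries) used by OpenAI's chat-completions API. The four task names come from the post; the instruction wording, the `TASKS` table, the `build_messages` helper, and the 8,000-character cap are hypothetical.

```python
# Hypothetical instructions for the four tasks mentioned in the post.
TASKS = {
    "summarize": "Summarize the following web page text in a few sentences.",
    "poetify": "Rewrite the following web page text as a short poem.",
    "roast": "Write a playful roast of the following web page text.",
    "praise": "Write enthusiastic praise of the following web page text.",
}


def build_messages(task, page_text, max_chars=8000):
    """Build a chat-style message list asking the model to apply `task` to the page text."""
    if task not in TASKS:
        raise ValueError(f"unknown task: {task!r}")
    # Truncate very long pages so the request stays small (hypothetical cap).
    text = page_text[:max_chars]
    return [
        {"role": "system", "content": TASKS[task]},
        {"role": "user", "content": text},
    ]
```

The resulting list would then be sent to a chat-completions endpoint along with credentials read from the config file. That network call is left out so the sketch runs offline. Listing config.yaml in `.gitignore` is one simple way to keep the keys out of the repository.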

As the adage goes, the first step is always the hardest. But once I get going, the pieces start falling into place and my vision begins to take form. Sure, the goal and purpose of BISCUIT may (see: will) change along the way, and I may have to restart completely from scratch to optimize things. But that's the best part: I've already learned so much just from trying.

The most powerful mindset to retain while making a mini passion project such as this is one of self-assuredness and a bit of humility. BISCUIT will not change the world, and it won't make me an overnight millionaire. It doesn't have to. I built it, taking an idea to a functioning, evolving vision, and that is reward enough.

Future of BISCUIT

I have a couple of ideas for what BISCUIT can be. As of 02M07 it is very much in its infant stages, purely a browser that simply does not load embedded images or JavaScript. To reach a point of completion on that front, I'd like to add a limited visited-page memory to implement "forward" and "back" buttons. It seems that if the CSS styling is defined in the loaded HTML page itself, some of it (like text coloring) gets resolved. But if the stylesheet is linked from an external file via a <link> element, it doesn't. Part of me wants to accommodate this type of CSS implementation since it's so common, but meh. Perhaps it's best to keep things as clean as possible. To that end, I'd like to clean up the parsed HTML to remove any img/figure elements, since they are rendered as weird placeholder sprites and I won't be downloading the linked images anyway.
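A limited visited-page memory for "back" and "forward" is classically built from two stacks. The following is a minimal sketch, assuming pages are identified by URL strings; the `History` class and its 50-entry default limit are hypothetical. `collections.deque(maxlen=...)` quietly discards the oldest entry once the limit is reached, which keeps the memory bounded.

```python
from collections import deque


class History:
    """Bounded back/forward navigation history built from two stacks."""

    def __init__(self, limit=50):
        self.back_stack = deque(maxlen=limit)     # pages visited before the current one
        self.forward_stack = deque(maxlen=limit)  # pages stepped back from
        self.current = None

    def visit(self, url):
        """Record a newly loaded page."""
        if self.current is not None:
            self.back_stack.append(self.current)
        self.current = url
        # Following a new link invalidates the old forward history.
        self.forward_stack.clear()

    def back(self):
        """Step back one page; stay put if there is nowhere to go."""
        if not self.back_stack:
            return self.current
        self.forward_stack.append(self.current)
        self.current = self.back_stack.pop()
        return self.current

    def forward(self):
        """Step forward one page; stay put if there is nowhere to go."""
        if not self.forward_stack:
            return self.current
        self.back_stack.append(self.current)
        self.current = self.forward_stack.pop()
        return self.current
```

Clearing the forward stack on a fresh visit mirrors how mainstream browsers behave: once you follow a new link from an earlier page, the pages you had stepped back from are no longer reachable with "forward."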

I have some long-horizon goals as well. Although I am pleased with BISCUIT and its simple design, I recognize its uses are very limited. It's a browser that does less. Wow. Cool, but what good does that really do? I have an idea brewing of transitioning BISCUIT into a hybrid browser/web-crawler/bookmark-summarizer. A user would be able to define (via a config file) webpages to be scraped, summarized, and concatenated into plain-text HTML, which can then be saved locally. The idea is that regularly updated pages could serve as a daily news source. We'll see how this manifests. If nothing else, I could tailor it specifically to the webpages I am most interested in, like finance, astronomy, and tech. I have partially achieved this as of 02S06 by hooking up the BISCUIT window to the OpenAI API, allowing users (like you!) to choose a task and "AI that shit" into a summary, poem, roast, or praise.
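One way the crawler/summarizer idea could be structured is as a small pipeline: for each configured URL, fetch the page text, summarize it, and append the result to a single HTML page saved to disk. This is a sketch under stated assumptions: the URL list is passed in directly rather than parsed from a config file, and `fetch_text` and `summarize` are hypothetical callables (for example, an HTTP fetch and an LLM request) injected so the pipeline can be tested offline. `build_digest` and `save_digest` are likewise hypothetical names.

```python
from html import escape
from pathlib import Path
from typing import Callable, Iterable


def build_digest(urls: Iterable[str],
                 fetch_text: Callable[[str], str],
                 summarize: Callable[[str], str],
                 title: str = "Daily digest") -> str:
    """Fetch and summarize each URL, then concatenate the results into one HTML page."""
    sections = []
    for url in urls:
        summary = summarize(fetch_text(url))
        # Escape everything so page text can't inject markup into the digest.
        sections.append(
            f'<h2><a href="{escape(url)}">{escape(url)}</a></h2>\n'
            f"<p>{escape(summary)}</p>"
        )
    return (
        "<!DOCTYPE html>\n"
        f'<html><head><meta charset="utf-8"><title>{escape(title)}</title></head>\n'
        f"<body><h1>{escape(title)}</h1>\n"
        + "\n".join(sections)
        + "\n</body></html>\n"
    )


def save_digest(html: str, path: str = "digest.html") -> Path:
    """Write the digest to a local file and return its path."""
    out = Path(path)
    out.write_text(html, encoding="utf-8")
    return out
```

Passing the fetch and summarize steps in as functions keeps the digest builder independent of any particular HTTP library or LLM provider, and the output is itself a lightweight page that a text-only browser can display.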